Teaching Quality from the Students’ Perspective: Using Teaching Analysis Poll (TAP) for Course Evaluations

By: Birgit Hawelka, the Director of the Center for University and Academic Teaching at the University of Regensburg, Germany.

See also: https://lehrblick.de/

Course evaluations are an integral part of quality assurance at German universities. Students at the University of Regensburg (as at most German universities) evaluate the quality of their courses at the end of each semester using a standardized questionnaire.

Course evaluations – more than a tedious chore?

Not all lecturers are happy with this approach. Common criticisms include:

- Courses are very heterogeneous in terms of students, implementation, and objectives. The standardized questionnaires do not provide adequate insight into course strengths and weaknesses.

- It is difficult for instructors to draw conclusions about how to improve their courses based on the reported results. When you receive a score of 2.3 for your explanations, what does that mean? Is that good? Or bad? Are there any improvements you could make? An open comment section at the end of a questionnaire is often viewed as much more valuable.

- Lecturers assume that students do not differentiate between distinct factors but rather give a verdict on how satisfied they are with the course as a whole.

Students, too, are dissatisfied with this standardized approach. Every semester, they are asked to evaluate a number of courses within one week, often using the same questionnaire. As a result, many of them experience evaluation fatigue. They may also be demotivated because it is unclear to them what conclusions are drawn from the evaluations.

Many lecturers at the University of Regensburg (Germany) have therefore adopted the Teaching Analysis Poll (Frank & Kaduk, 2017) as an alternative to standardized course evaluation.

Teaching Analysis Poll (TAP): A qualitative method for course evaluation

TAPs are usually conducted in the middle of the semester. There are several ways to conduct Teaching Analysis Polls. In the following steps, we outline how this method is implemented at the University of Regensburg.

Step 1: Survey

The lecturer ends the seminar session 20 minutes early and leaves the room. An external evaluator, who might be a colleague, an academic developer, or a trained student, comes in and conducts the survey. Two open questions are posed to the students:

1) In this specific course, what aspects of classroom instruction facilitate your learning?

2) What aspects of this course hinder your learning?

Step 2: Discussion and Documentation

Students discuss these questions in small groups and write down their thoughts. Afterwards, the evaluator presents the responses from each group to the entire class for further discussion.

The evaluator clarifies any ambiguous or misleading statements made by students and rephrases them in more instructional terms. For example, the feedback “only student presentations” from a group of students could be interpreted in two different ways: (1) the students find the course design boring because student presentations are the only teaching method used, or (2) the students want more input and explanations from the instructor, rather than relying solely on peer presentations.

Evaluators are responsible for clarifying ambiguous statements such as this.

Step 3: Classification and data analysis

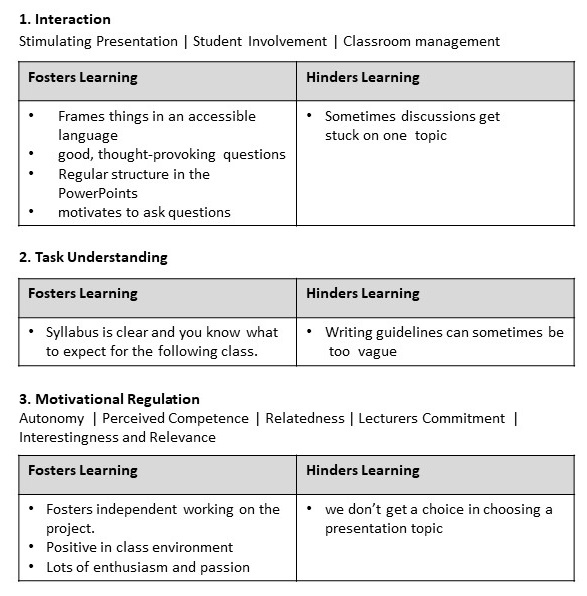

Student feedback is classified and analyzed by the evaluator after the session has ended. Using a coding manual (Hawelka, 2019), the evaluator organizes the students’ feedback by instructional criteria.

Step 4: Feedback

A report with the results of the student feedback is emailed to instructors. Because the feedback is organized by a coding system, instructors can easily see the strengths and weaknesses of their course (see figure).

Step 5: Consultation

In a follow-up meeting, the lecturer and the evaluator collaborate to come up with ideas for addressing the student feedback and improving the course. This type of “consultative feedback” (Penny & Coe, 2004) has been shown to enhance teaching effectiveness.

The following statements from lecturers at the University of Regensburg demonstrate how they assess the benefits of this methodology.

- “TAPs allow students and teachers to communicate constructively about teaching.”

- “The results of the TAP motivated me to improve certain aspects of my course.”

- “I have seen a significant improvement in my course after implementing the results.”

- “The TAP process has provided me with a lot of valuable feedback.”

- “TAP has also made me realize that my commitment to teaching is appreciated, which is very motivating.”

At the University of Regensburg, this method has proved effective: it reveals both the strengths and the weaknesses of a course, and it allows lecturers to develop direct approaches within their courses that facilitate student learning. By linking evaluation and (peer) consultation, it enhances the quality of teaching.

References

Frank, A. & Kaduk, S. (2017). Lehrveranstaltungsevaluation als Ausgangspunkt für Reflexion und Veränderung. Teaching Analysis Poll (TAP) und Bielefelder Lernzielorientierte Evaluation (BiLOE). In Qm-Systeme in Entwicklung: Change (or) Management?: Tagungsband der 15. Jahrestagung des Arbeitskreises Evaluation und Qualitätssicherung der Berliner und Brandenburger Hochschulen (pp. 39–52). Freie Universität Berlin.

Hawelka, B. (2019). Coding Manual for Teaching Analysis Polls. Centre for University and Academic Teaching, Regensburg. https://www.uni-regensburg.de/assets/zentrum-hochschul-wissenschaftsdidaktik/forschung/manual-tap-2019.pdf

Penny, A. & Coe, R. (2004). Effectiveness of Consultation on Student Ratings Feedback: A Meta-Analysis. Review of Educational Research, 74(2), 215–253.